AI researchers are packing their bags

Several high-profile employees at AI companies like Anthropic and OpenAI have resigned – and done so loudly. But behind the viral resignation letters lie differing motives: from genuine exasperation at the state of the world, through fear of advertising in ChatGPT, to a former Google executive launching luxury retreats for top executives the very same day he warns of AI doom and gloom. Who is sincere, and who has learnt their tricks from the tech oligarchs?

This is Kludder of the week!

A resignation at AI giant Anthropic spurred a global media storm. But what is the actual reason the safety lead chose to pack his bags and move to Britain to write poetry?

Last week, Mrinank Sharma attracted huge attention worldwide. Sharma led a safety team at Anthropic, but chose to resign – and publish his resignation letter on the platform X.

The letter quickly went viral, largely because of Sharma's reason for leaving:

Nevertheless, it is clear to me that the time has come to move on. I continuously find myself reckoning with our situation. The world is in peril. And not just from AI, or bioweapons, but from a whole series of interconnected crises unfolding in this very moment. – Mrinank Sharma in his resignation letter.

BBC, Forbes and Business Insider were just some of the news outlets that covered the story. This made me curious.

'Cause this is far from the first time we've heard doomsday prophecies tied to artificial intelligence.

Advertising the last straw

Just two days later, another public resignation appeared. This time it was an OpenAI employee, Zoë Hitzig, who'd had enough. Hitzig spent two years as a researcher at OpenAI. But when the company behind ChatGPT announced they were rolling out an advertising solution in the language model, she couldn't take it any more.

In her op-ed "OpenAI Is Making the Mistakes Facebook Made. I Quit.", Hitzig describes her concern about how this advertising solution will use information users have left behind in ChatGPT:

For several years, ChatGPT users have generated an archive of human candor that has no precedent, in part because people believed they were talking to something that had no ulterior agenda. – Hitzig

Hitzig, who also holds a doctorate from Harvard, is firm in her conviction: advertising built on this "human archive" creates an entirely unique opportunity to manipulate users in ways we cannot imagine.

She draws parallels to the time when Facebook promised us complete control over our own data, and that we'd be able to vote(!) on changes the company made to its terms and data collection. Oh, how naive we were!

Since 2009 – when Facebook promised us this – Meta's advertising market has ballooned alongside its data collection.

In this article, I wrote about the money Facebook makes from fake advertising and scam attempts (Norwegian):

A report from Tech Transparency singled out 63 advertisers using misleading or fraudulent methods. Those same advertisers account for roughly 20 per cent of Facebook's top 300 advertisers in the political or social advertising category.

In total, the fraudulent actors purchased nearly 150,000 adverts and spent approximately 49 million dollars over a seven-year period, without Facebook taking any action to stop them.

This is what Hitzig believes is about to happen in ChatGPT, and this is what she doesn't want to be part of. She points out that the battle for your attention is also in full swing. The language model is, after all, programmed to flatter you and make you feel good. I've written more about that here:

A destructive race

In 2024, another OpenAI researcher chose to leave. Daniel Kokotajlo was one of nine employees who raised the alarm about what they considered reckless and dangerous behaviour by Sam Altman and the OpenAI crew.

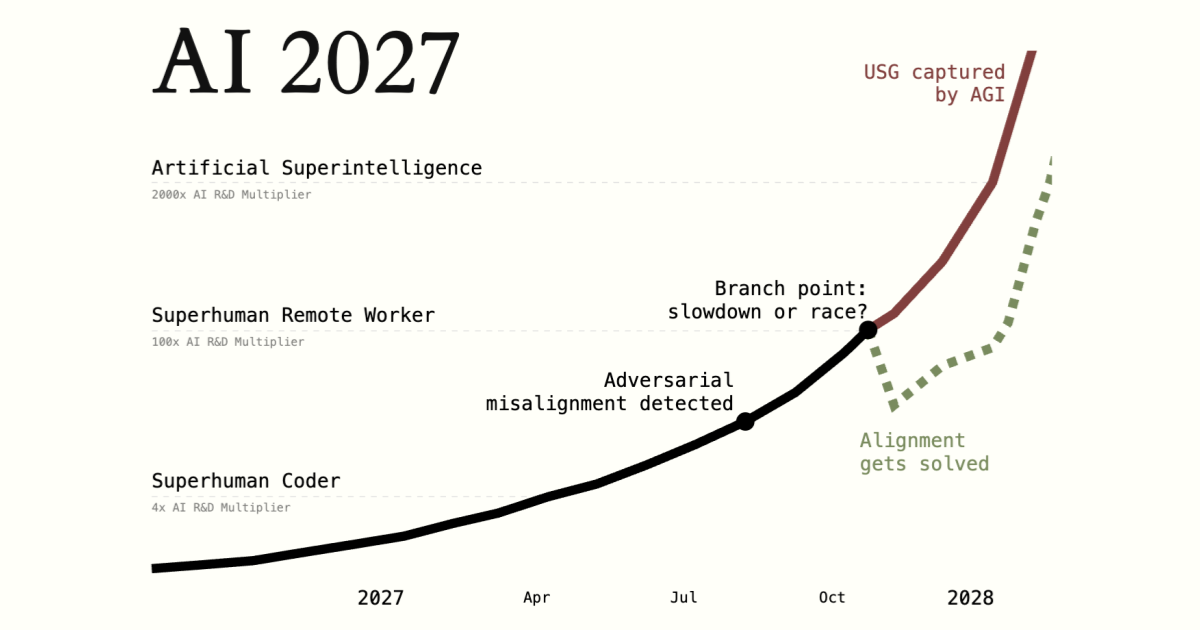

Kokotajlo eventually became a spokesperson for concerned AI researchers and established the AI Futures Project. Together with other researchers, Kokotajlo predicts artificial superintelligence by the end of 2027, something they've written about in detail on their website, AI2027.

Doomsday and executive retreats

Yet another former Big Tech employee has been in the media this week. Dex Hunter-Torricke has previously worked at Facebook and SpaceX. He has also served as communications director at Google DeepMind, which heads the search engine giant's AI efforts.

Hunter-Torricke has written an essay called Another Future is Possible. In it, he argues that artificial intelligence is on the verge of creating catastrophe for the world – and particularly for the more technologically advanced nations.

The essay is well written, and Hunter-Torricke raises many of the points Kludder has touched on: artificial intelligence preventing graduates and younger people from starting their careers, Anthropic chief Dario Amodei warning of the biggest shift in the global economy we've ever seen – and how it's we, the workers, who are training the model that will ultimately make us all unemployed.

It's easy to agree with what Hunter-Torricke writes. It's his motives that make me do a double take. Because once again we see a former "AI employee" breaking away and setting up a non-profit organisation – just as Kokotajlo and company have done.

Hunter-Torricke makes a good case for why he chose to spend twenty years of his life working for Mark Zuckerberg and Elon Musk. It's worth remembering, though, that communications is his trade.

That's why something doesn't sit right with me when the essay comes out on the same day he launches The Center for Tomorrow. The organisation offers retreats for global top executives, and promises access to an exclusive network. These gatherings are meant to provide, according to the website, "An integrated understanding of how AI, economic transformation, climate pressure, and global instability collide, and what that means for leaders with real responsibility".

Does Hunter-Torricke mean what he writes, or has he simply learnt from the best tech oligarchs and is using AI fear to drum up business for his own venture?

What I had seen in those rooms, over those years, now made it impossible to stay. – Hunter-Torricke

If you want to make up your own mind, read his essay here:

These stories are part of a long line of employees who leave, only to then reveal what they really think.

At least, that's how it can look at first glance.

Who is Sharma?

When the story about Mrinank Sharma appeared, I had to find out who he was. On his website, he writes about the joy he finds in poetry and writing poems. He writes quite a lot, as it turns out. And in his resignation letter, that's precisely what he says he wants to do:

I feel called to writing that addresses and engages fully with the place we find ourselves, and that places poetic truth alongside scientific truth as equally valid ways of knowing, both of which I believe have something essential to contribute when developing new technology. – Sharma

But now he's chosen to pack his bags. The gap between the values of Anthropic, where he worked, and his own has grown too big.

The media were quick to point out the fact that an AI safety lead at an AI company believes the world is in danger. But don't we all feel that way, given how things stand today?

Setting artificial intelligence aside, we see authoritarian regimes on the rise. Norway's neighbour has invaded one of its other neighbours, and who knows what will happen to Greenland? On social media, division, rage-baiting and conspiracy theories are rewarded.

When I read Sharma's letter, I don't read it as artificial intelligence being the sole threat. I read it as a young man, exasperated by the state of the world we find ourselves in, trying to put it into words.

The divergence in values he speaks of could just as easily be about Anthropic gearing up for an IPO, with the pursuit of profit now stronger than when he started out at the AI company.

And if we're being honest: haven't we all dreamt of packing our bags, moving somewhere the world ends and the sea begins, just living free from the worries of the world?

I certainly have.

But for the time being, I'm here – doing exactly what Sharma says he wants to do more of:

Writing.

Today is my last day at Anthropic. I resigned. Here is the letter I shared with my colleagues, explaining my decision. pic.twitter.com/Qe4QyAFmxL

– mrinank (@MrinankSharma) February 9, 2026